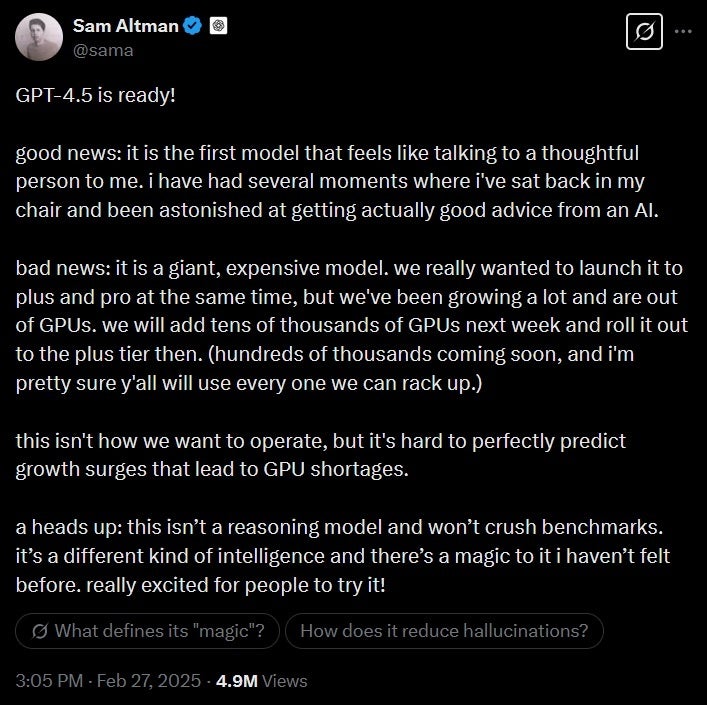

OpenAI was forced to stagger the rollout of its new GPT-4.5 model because it is out of GPUs (graphics processing units). These chips are used to train models, process large amounts of data, and perform complex calculations. If you are wondering why central processing units (CPUs) are not used instead, the answer is easy to understand: GPUs process many operations in parallel, which suits the matrix operations behind artificial intelligence far better than the serial processing used by CPUs.

Running to Walmart to grab more GPUs, the way we might restock printer ink when we run out, is not an option here. This is why OpenAI is looking to develop its own chips, which would leave it less vulnerable to volatile inventory levels at Nvidia.

Sam Altman admits that OpenAI is out of GPUs. | Image credit: X

Discussing the new GPT-4.5 model in a tweet, Altman notes that it is not a reasoning model and will not set new benchmark records. He goes on to say of GPT-4.5, "it's a different kind of intelligence and there's a magic to it I haven't felt before. Really excited for people to try it!"

Altman warns that GPT-4.5 is an "expensive model," costing $75 per million input tokens and $150 per million output tokens. By comparison, GPT-4o costs only $2.50 per million input tokens and $10 per million output tokens. A token is a unit of text that can be a single character, a word, or even a punctuation mark. Large language models (LLMs) break text into tokens before processing it.

If you are wondering how Wall Street darling Nvidia has risen 1,748.96% over the past five years, this is your answer. Nothing is hotter on Wall Street right now than artificial intelligence, and demand for Nvidia's leading chips has been growing for the past few years.
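To put the quoted pricing gap between GPT-4.5 and GPT-4o in concrete terms, here is a minimal Python sketch that applies the per-million rates cited in the article to a single request. The `request_cost` helper and the workload size (100,000 input units and 20,000 output units) are hypothetical, chosen only for illustration; actual billing may differ.

```python
# Prices in US dollars per million tokens, as quoted in the article.
PRICES = {
    "GPT-4.5": {"input": 75.00, "output": 150.00},
    "GPT-4o": {"input": 2.50, "output": 10.00},
}


def request_cost(model, input_tokens, output_tokens):
    """Return the dollar cost of one request at the quoted per-million rates."""
    rates = PRICES[model]
    total = input_tokens * rates["input"] + output_tokens * rates["output"]
    return total / 1_000_000


# Hypothetical workload: 100,000 input tokens and 20,000 output tokens.
for model in PRICES:
    print(f"{model}: ${request_cost(model, 100_000, 20_000):.2f}")
```

At the quoted rates, GPT-4.5 is 30 times more expensive than GPT-4o on input ($75 vs. $2.50) and 15 times more expensive on output ($150 vs. $10), so the overall gap for a given request depends on its mix of input and output.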



GPU shortage hits OpenAI, delays rollout of ChatGPT-4.5

| Field | Value |
|---|---|
| Genre | News & Magazines |
| Update | March 1, 2025 |