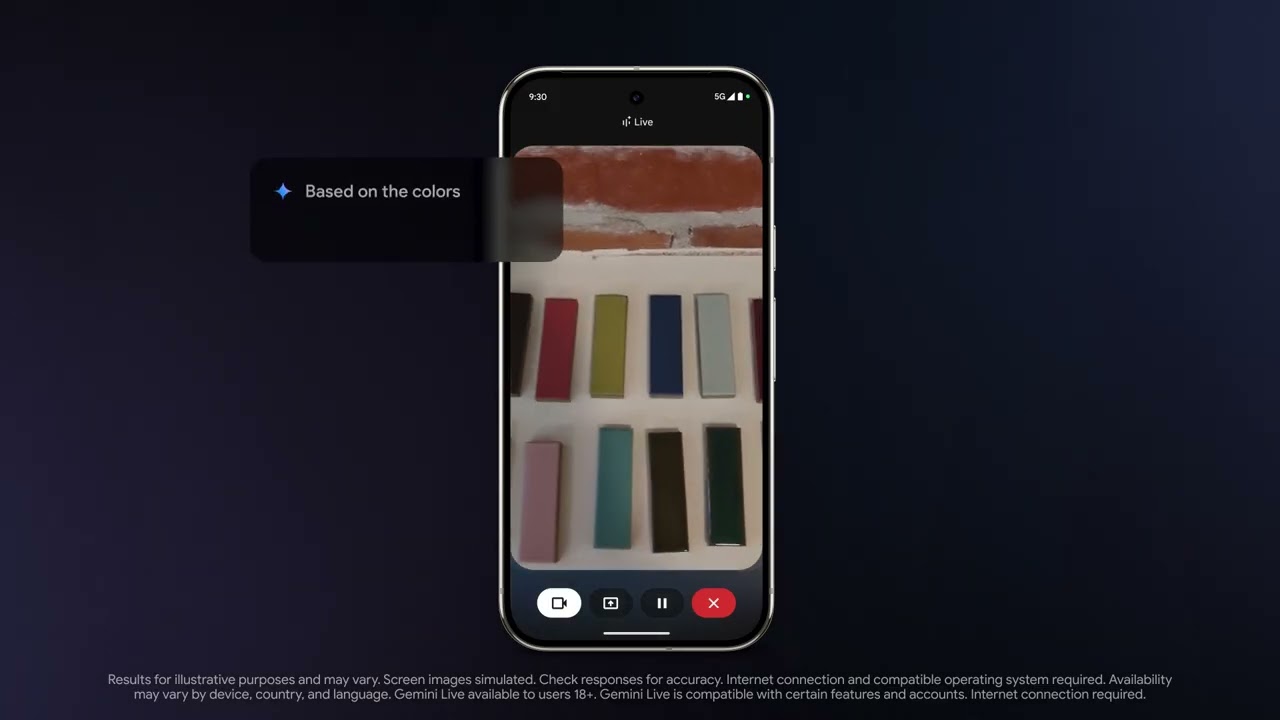

Google has started rolling out new real-time AI video features in Gemini, allowing it to interpret visual input from the user's device screen or smartphone camera and provide contextual answers. Confirmation of the rollout comes almost a year after the initial demos of “Project Astra”, the underlying technology that powers these capabilities, at Google I/O 2024. A Reddit user first reported the feature appearing on a Xiaomi phone and later shared a video showcasing Gemini’s new ability to analyze on-screen content. This screen-reading function is one of the two main features that Google announced in early March would come to Gemini Advanced subscribers on the Google One AI Premium plan later that month.

The second announced feature uses the smartphone camera to process a live video feed, allowing Gemini to understand the user’s surroundings and answer questions about them.

This update follows the recent introduction of Gemini’s Canvas feature, which helps users with writing and coding tasks, and the addition of podcast-style summary tools. Together with Gemini Live video, it shows how far Google has progressed in AI assistant technology compared to Apple, Samsung and even Amazon.

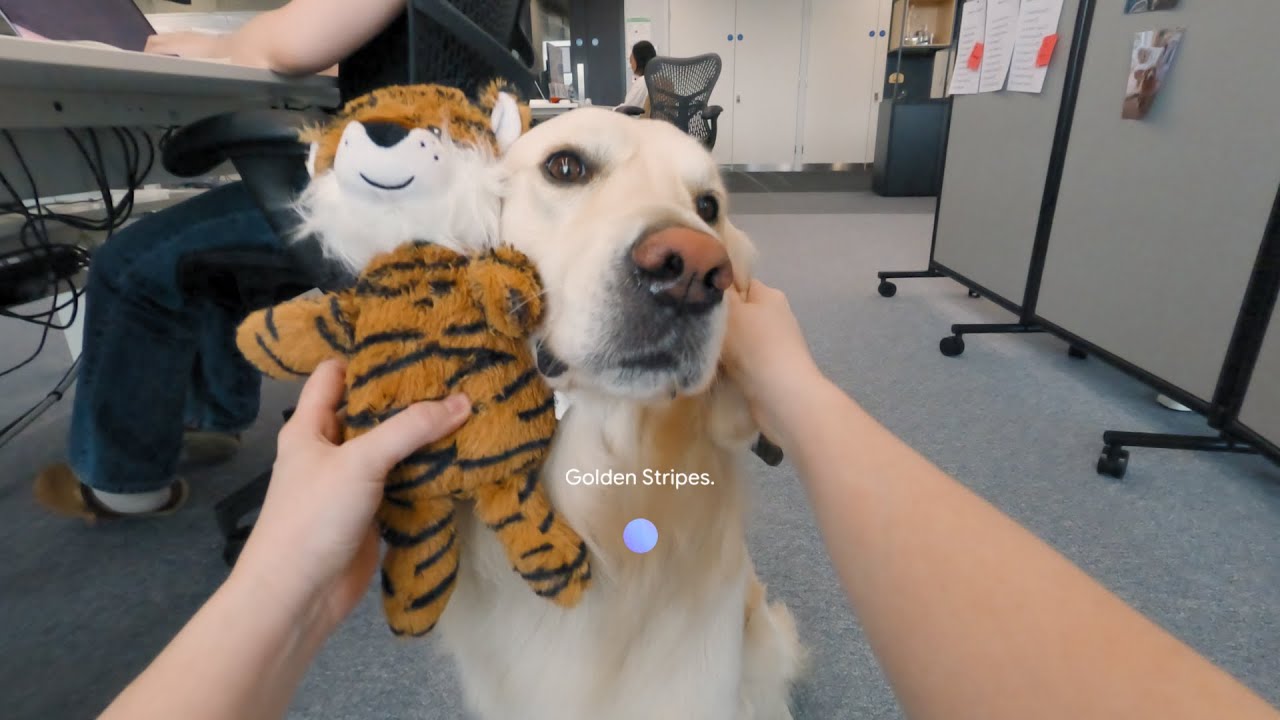

Of course, this does not seem to include every aspect of Project Astra as it was demoed last year and can be seen in the video above. The full demo showed the assistant remembering objects it had seen through your camera and later telling you where you saw them, or even letting you draw on objects to get more information about the marked area.

However, to me it seems that we are not far from the AI assistant future that was shown last year. The rate at which Gemini is progressing across its feature set is very promising, and I am very excited to find out how Google will build on it at this year's Google I/O conference.
